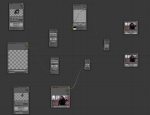

12/16/12

Read about the whole project at http://kstritzdpa.wordpress.com/

Here are the shots that I was responsible for.

Attack

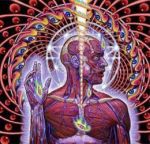

My job on the character design was to model, texture, render, and animate dollface. I used the image of the real doll as my modeling reference.

Then I painted a detailed texture over its UV map.

I used Blender’s particle hair renderer to create the hair. I tried to make it dynamic so that it would swing from side to side as dollface approached the camera, but the tracking data made the movement very bumpy, which made the hair look strange. The hair, face, and background were each rendered on their own separate layer as PNGs.

To track Gene’s face, I stuck a couple of Post-it notes on the sides of his face, but a few kept falling off. It worked out OK because I was able to track the corners of his eyes and mouth instead. Blender needs at least 8 markers to compute a solve, so I had to do a lot of manual tracking.

For rendering the face, I used Blender’s new Cycles engine, a path-tracing global illumination renderer that can run on the GPU. Luckily, I have a new video card with a ton of cores, so it rendered pretty fast, even at 400 samples per pixel. However, Cycles does not support the strand renderer yet, so I rendered the hair on a separate layer. Cycles does not support motion blur yet either, so when I composited the hair, face, and background, I also had to render the meshes separately to get the motion vectors for the blur. I originally wanted to use .exr files, but they were too big, and since I was using Dropbox, storage space was an issue.
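Because the hair, face, and background came out as separate PNG layers, stacking them is the standard Porter-Duff "over" operation, the same idea behind Blender’s Alpha Over node. Below is a minimal per-pixel sketch, assuming premultiplied RGBA values stored as floats from 0 to 1; `over`, `composite_layers`, and the sample pixel values are hypothetical and only illustrate the maths.

```python
from typing import List, Tuple

# RGBA pixel with premultiplied alpha: colour channels are already multiplied by alpha.
Pixel = Tuple[float, float, float, float]


def over(fg: Pixel, bg: Pixel) -> Pixel:
    """Porter-Duff 'over': lay a premultiplied foreground pixel on top of a background pixel."""
    f_r, f_g, f_b, f_a = fg
    b_r, b_g, b_b, b_a = bg
    k = 1.0 - f_a  # fraction of the background that shows through
    return (f_r + b_r * k, f_g + b_g * k, f_b + b_b * k, f_a + b_a * k)


def composite_layers(layers: List[Pixel]) -> Pixel:
    """Composite layers ordered back to front, e.g. [background, face, hair]."""
    result: Pixel = (0.0, 0.0, 0.0, 0.0)  # start fully transparent
    for layer in layers:
        result = over(layer, result)
    return result


background = (0.2, 0.3, 0.4, 1.0)  # opaque plate pixel
face = (0.0, 0.0, 0.0, 0.0)        # face layer is empty at this pixel
hair = (0.25, 0.2, 0.1, 0.5)       # half-transparent hair strand, premultiplied
print(composite_layers([background, face, hair]))
```

"Over" is not commutative, so the layer list has to run from back to front for the nearest layer to end up on top.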

Climbing A

The climbing shot was very difficult to track, so I split it into two parts. The first part only shows his hair as he rises above the hill. For this shot to work, I had to track both the scene and the face: the scene markers kept the alpha mask following the camera, while the face markers drove the position and rotation keyframes for the face and hair. The green mesh is the mask in this shot.

Climbing B

This shot was also difficult because Gene’s nun outfit kept getting in the way of the markers, so I went frame by frame to make sure the trackers followed correctly. The rotation wasn’t completely solved, so I had to keyframe an animation of his face turning toward the camera.

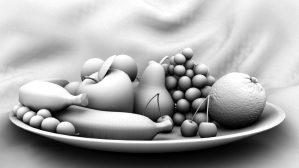

News Story A and B

Neither of these shots was too difficult to make, but both took a long time to render. Karen did the news report in front of the green screen, and there was minimal green spill. I used Blender’s new keying node to key out the green screen and the Translate node to scroll the text at the bottom. Luckily, the camera was set to 24p, so I did not have to de-interlace the video. The background is a static image with the car and dollhouse superimposed on top, and I composited some grain over it so it would not look like a still frame.
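For anyone curious what keying does under the hood, a common generic approach is a colour-difference key: a pixel becomes transparent as its green channel rises above the stronger of red and blue, and a despill step clamps leftover green on the foreground. This is a minimal sketch of that textbook technique, not the algorithm inside Blender’s keying node; `green_key`, `despill`, and the `gain` value are hypothetical, and RGB values are assumed to be floats from 0 to 1.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def green_key(r: float, g: float, b: float, gain: float = 2.0) -> float:
    """Colour-difference key: returns alpha (1.0 = keep foreground, 0.0 = green screen)."""
    difference = g - max(r, b)  # how far green exceeds the other two channels
    alpha = 1.0 - gain * difference  # more excess green means more transparent
    return min(1.0, max(0.0, alpha))  # clamp to the valid alpha range


def despill(r: float, g: float, b: float) -> RGB:
    """Suppress green spill by limiting green to the average of red and blue."""
    return (r, min(g, (r + b) / 2.0), b)


print(green_key(0.1, 0.9, 0.1))  # pure screen green -> 0.0
print(green_key(0.8, 0.6, 0.5))  # skin tone -> 1.0
print(despill(0.5, 0.8, 0.25))   # green-tinted edge -> (0.5, 0.375, 0.25)
```

Raising `gain` makes the key harder, so less of the screen survives as semi-transparent fringe; with minimal spill, a gentle despill like this one is usually enough.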

Final News Shot

The final scene shows the empty room with the news story playing on the TV. All I had to do was translate, rotate, and scale the news story onto the TV screen and do a little color correction. The scary face also pops up at the end.
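Fitting the news footage onto the TV boils down to a 2D similarity transform applied to every point of the frame: scale, then rotate, then translate. A minimal sketch of that maths, assuming coordinates measured from the centre of the news frame; `place_point` and the scale, angle, and offset values are hypothetical, not the numbers used in the shot.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def place_point(p: Point, scale: float, angle_deg: float, offset: Point) -> Point:
    """Scale, then rotate counter-clockwise about the origin, then translate a 2D point."""
    x, y = p[0] * scale, p[1] * scale
    a = math.radians(angle_deg)
    xr = x * math.cos(a) - y * math.sin(a)
    yr = x * math.sin(a) + y * math.cos(a)
    return (xr + offset[0], yr + offset[1])


# Hypothetical values: shrink a 1920x1080 frame to 30%, tilt it 2 degrees,
# and move it 400 px right and 150 px up so it sits on the TV screen.
half_w, half_h = 960.0, 540.0
corners = [(-half_w, -half_h), (half_w, -half_h), (half_w, half_h), (-half_w, half_h)]
for c in corners:
    print(place_point(c, scale=0.3, angle_deg=2.0, offset=(400.0, 150.0)))
```

The order matters: translating before scaling would also scale the offset, which is why the scale and rotation are applied about the frame centre first.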

Editing

I was not tasked with editing, but it was not done in time, so I took it on. I first tried editing in Final Cut, even though I had never used it before. It was absolutely terrible compared to Blender’s video editor, so I quickly switched to Blender. For all of the outdoor shots, I ran the frames through a node group (the equivalent of a gizmo in Nuke) that handled color correction, color balance, a vignette, and a slight lens distortion. I also had to cut all of the sound and try to sync it with the video.
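The advantage of a node group is that one fixed look gets applied identically to every outdoor shot. As a rough sketch of that idea in plain Python, the functions below chain a per-channel gain (a stand-in for the color correction) with a radial vignette, and leave out the lens distortion step. `grade_pixel`, `vignette_factor`, the quadratic falloff, and the `strength` default are all assumptions for illustration, not the contents of the actual node group.

```python
import math
from typing import Tuple

RGB = Tuple[float, float, float]


def vignette_factor(u: float, v: float, strength: float = 0.3) -> float:
    """Darkening multiplier for a pixel at normalised coordinates (u, v) in [0, 1]."""
    # Distance from the frame centre, scaled so each corner sits at 1.0.
    d = math.hypot(u - 0.5, v - 0.5) / math.hypot(0.5, 0.5)
    # Quadratic falloff: 1.0 at the centre, (1 - strength) at the corners.
    return 1.0 - strength * d * d


def grade_pixel(rgb: RGB, u: float, v: float, gain: RGB = (1.0, 1.0, 1.0)) -> RGB:
    """Apply the shared look: per-channel gain (clamped to 1.0), then the vignette."""
    f = vignette_factor(u, v)
    r, g, b = (min(1.0, c * k) * f for c, k in zip(rgb, gain))
    return (r, g, b)


# The same settings are reused for every shot, just as the node group is.
warm = (1.1, 1.0, 0.9)
print(grade_pixel((0.5, 0.5, 0.5), 0.5, 0.5, warm))  # centre pixel, no vignette
print(grade_pixel((0.5, 0.5, 0.5), 0.0, 0.0, warm))  # corner pixel, darkened
```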

Then I made a quick title and credits screen.

Problems

The first major problem was that the camera had switched itself to 1080 interlaced at 59.94 FPS for the outdoor shots. We have no idea how the setting got changed, so I had to de-interlace each video and resample it down to 24 FPS. Tracking was also a big problem, but I was impressed with Blender’s motion tracking: it solved pretty quickly and placed the 3D models in the scene almost automatically. I had my shots done a week early, but the other shots were not finished until the day the film was due, which left no time for editing. Rotopainting does take forever, but that should have been planned for beforehand.
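Turning 59.94 FPS footage into 24 FPS means each output frame has to borrow the source frame nearest to it in time. A minimal sketch of that timing arithmetic, assuming a bob-style de-interlace (one full frame per field, so the source runs at 60000/1001 frames per second) and nearest-frame selection with no blending; `source_index_for` is a hypothetical helper, not a Blender function.

```python
from fractions import Fraction

# Assumption: each 59.94 fields/s video was de-interlaced to one frame per field,
# giving 60000/1001 progressive frames per second.
SOURCE_FPS = Fraction(60000, 1001)
TARGET_FPS = Fraction(24)


def source_index_for(output_frame: int) -> int:
    """Index of the source frame closest in time to a given 24 fps output frame."""
    t = Fraction(output_frame) / TARGET_FPS  # output frame time in seconds
    return round(t * SOURCE_FPS)  # nearest source frame at that time


print([source_index_for(n) for n in range(6)])  # → [0, 2, 5, 7, 10, 12]
```

The gaps between the chosen source frames alternate between 2 and 3, since there are about 2.5 source frames per output frame; a smoother resample would blend neighbouring frames instead of dropping them.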

Conclusions

This was a very fun project, albeit a fairly ambitious short film. Karen’s storyboard and directing were great, and the actors did a good job. I was not that impressed with the earlier scenes in the film, and I was not happy that they weren’t done until the day before it was due. Rapidly editing the film was stressful, but it was good experience. I also found nothing that Nuke could do that Blender couldn’t do better and faster, and the same went for Final Cut and Maya. I understand those tools are the industry standard; I just think it’s funny that, for this project, Blender beat all of them at no cost.